Asking why: moving beyond error detection to failure mode and effects analysis

Laboratory results are often consulted in the course of clinical decision making. An error in the laboratory total testing process can have an amplified effect on downstream clinical decisions and outcomes (1). Consequently, recognizing and monitoring errors that may affect the total testing process, to ensure quality and minimize patient harm, has become a key focus of modern clinical laboratory operations. Among the clinical disciplines, laboratory medicine was one of the earliest to adopt quality concepts from other industries. As early as the 1950s and 1960s, statistical concepts for detecting a deviation in analytical performance were widely explored and implemented in routine clinical laboratory testing (2,3). Early efforts focused on internal quality control, in which control materials are run alongside patient samples. Two key concepts emerged from this phase and have become the cornerstone of current laboratory quality control practice.

The Shewhart control chart (also known as the process-behavior chart) was originally described by Walter A. Shewhart, who worked at Bell Labs, as a means of reducing variation in manufacturing processes (2). Levey and Jennings repurposed the chart to visualize and monitor laboratory quality control results (2), and it subsequently became widely known among laboratory practitioners as the Levey-Jennings control chart. Similarly, the Western Electric rules were first described by the Western Electric Company as decision rules in statistical process control, allowing engineers to interpret out-of-control conditions in a consistent manner. They were adopted by clinical laboratories, where they are now more commonly known as the ‘Westgard’ rules (3). Beyond traditional internal quality control, there has recently been renewed interest in patient-based real-time quality control techniques for monitoring laboratory performance, and this is an area of great activity (4,5).
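To make rule-based interpretation concrete, the following is a minimal Python sketch that converts consecutive results for one control material into z-scores (the quantity plotted on a Levey-Jennings chart) and checks several commonly used Westgard rules against the most recent result. It is an illustration under simplifying assumptions, not a validated QC implementation: the function name `westgard_flags` is hypothetical, the target mean and SD are assumed to be established beforehand, and all rules are applied within a single control material only (in practice, rules such as R_4s are often applied across control levels within a run).

```python
from typing import List, Sequence


def _beyond(z_scores: Sequence[float], limit: float) -> bool:
    """True if every z-score exceeds +limit, or every one is below -limit."""
    return all(z > limit for z in z_scores) or all(z < -limit for z in z_scores)


def westgard_flags(values: Sequence[float], mean: float, sd: float) -> List[str]:
    """Return the (simplified) Westgard rules violated by the latest result.

    `values` are consecutive results for ONE control material, oldest first;
    `mean` and `sd` are its established target mean and standard deviation.
    """
    if sd <= 0 or not values:
        raise ValueError("need a positive SD and at least one result")
    z = [(v - mean) / sd for v in values]  # Levey-Jennings z-scores
    flags: List[str] = []
    if abs(z[-1]) > 3:  # 1_3s: one result beyond 3 SD
        flags.append("1_3s")
    if len(z) >= 2 and _beyond(z[-2:], 2):  # 2_2s: two in a row beyond 2 SD, same side
        flags.append("2_2s")
    # R_4s: one of the last two results above +2 SD and the other below -2 SD
    if len(z) >= 2 and max(z[-2:]) > 2 and min(z[-2:]) < -2:
        flags.append("R_4s")
    if len(z) >= 4 and _beyond(z[-4:], 1):  # 4_1s: four in a row beyond 1 SD, same side
        flags.append("4_1s")
    if len(z) >= 10 and _beyond(z[-10:], 0):  # 10_x: ten in a row on one side of the mean
        flags.append("10_x")
    return flags


print(westgard_flags([100, 101, 107], mean=100, sd=2))
```

With a target mean of 100 and an SD of 2, the series `[100, 101, 107]` ends with a z-score of 3.5 and is flagged by 1_3s only; a pair such as `[105, 95]` would instead trigger R_4s.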

Initially, the capability of a statistical technique to detect error was often measured in terms of statistical power, or the probability of error detection, while the false-positive rate was the main consideration limiting a technique (3). Under these considerations, a statistical method is preferred when the probability of detecting an error is high (i.e., internal quality control has a high probability of flagging when an error is present) and the false-positive rate is low (i.e., there are few false internal quality control rejections). However, these metrics often do not provide a direct indication of potential patient harm. For example, an internal quality control set-up might have a high probability of error detection (e.g., by setting narrow control limits) but be performed infrequently. An error may still be missed in this scenario simply because no quality control testing was performed after the error occurred, and the undetected error may lead to the reporting of erroneous results. Consequently, more nuanced considerations have been developed to relate statistical performance to clinical risk, such as the number of patients affected before error detection and the probability of patient harm (6).
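The contrast between detection probability and patient impact can be sketched numerically. The Python below computes the rejection probability of a single 1_3s rule for a systematic shift expressed in SD units and, under a deliberately simplified model (a persistent error that starts at a random point within a run, with an independent chance of detection at each QC event), the expected number of patient results reported before detection. The function names and the model are illustrative assumptions; this is not the formulation used by Parvin and Baumann (6).

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def p_reject_1_3s(shift_sd: float, n_controls: int = 1) -> float:
    """Probability that a 1_3s rule rejects a run after a systematic shift.

    `shift_sd` is the shift in the analytical mean in SD units; a shift of 0
    gives the false-rejection probability.
    """
    # Chance that one control result falls outside +/-3 SD of the target mean
    p_single = normal_cdf(-3.0 - shift_sd) + (1.0 - normal_cdf(3.0 - shift_sd))
    # The run is rejected if at least one of n independent results falls outside
    return 1.0 - (1.0 - p_single) ** n_controls


def expected_patients_affected(p_detect: float, patients_between_qc: int) -> float:
    """Simplified mean number of patient results reported before detection.

    Assumes a persistent error starting at a uniformly random point in a run
    and an independent detection probability at every QC event.
    """
    if not 0.0 < p_detect <= 1.0:
        raise ValueError("p_detect must be in (0, 1]")
    # On average half a run passes before the first QC event after onset;
    # each missed QC event lets another full run of patient results through.
    missed_qc_events = (1.0 - p_detect) / p_detect
    return patients_between_qc / 2 + patients_between_qc * missed_qc_events


print(round(p_reject_1_3s(0.0, n_controls=2), 4))  # false rejection, 2 controls
print(round(expected_patients_affected(0.9, 500)))  # strong rule, infrequent QC
print(round(expected_patients_affected(0.5, 50)))   # weaker rule, frequent QC
```

Under this toy model, a rule with a detection probability of 0.9 but only one QC event every 500 patient samples lets about 306 results through on average, whereas a weaker rule (detection probability 0.5) run every 50 samples lets through about 75, which is the point the paragraph above makes about QC frequency.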

Beyond the statistical techniques used to detect a deviation in analytical performance, concepts for classifying errors have also received much attention. The International Federation of Clinical Chemistry and Laboratory Medicine has developed a set of quality indicators covering clinically important errors in the pre-analytical, analytical, and post-analytical phases of the total testing process (7). Clinical laboratories are encouraged to adopt them to monitor their total testing process and to benchmark themselves against their peers (8). At the same time, empirical evaluation before the deployment of a laboratory method, laboratory proficiency testing, and accreditation and regulatory frameworks were developed to safeguard minimum standards in laboratory practice.
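Benchmarking against peers requires indicator rates from laboratories of different sizes to be placed on a common scale. One common way, borrowed from Six Sigma practice, is to convert an error rate into a sigma value; the Python sketch below assumes that convention, including the customary 1.5 SD shift. The function name `qi_sigma` and the example counts are hypothetical, and an actual benchmarking scheme may define errors and opportunities differently.

```python
from statistics import NormalDist


def qi_sigma(errors: int, opportunities: int, shift: float = 1.5) -> float:
    """Convert a quality-indicator error count into a sigma value.

    Uses the Six Sigma convention: z-value of the error-free fraction plus a
    1.5 SD long-term shift (an assumption, adjustable via `shift`).
    """
    if not 0 < errors < opportunities:
        raise ValueError("need 0 < errors < opportunities")
    error_rate = errors / opportunities  # e.g. rejected samples / samples received
    return NormalDist().inv_cdf(1.0 - error_rate) + shift


# Hypothetical counts from two laboratories of very different size
print(round(qi_sigma(120, 40_000), 2))
print(round(qi_sigma(900, 600_000), 2))
```

As a check on the convention, 3.4 errors per million opportunities maps to approximately 6 sigma, and about 66,807 per million maps to approximately 3 sigma.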

The brief history above shows how far laboratory medicine has come in adopting a quality- and safety-conscious culture. However, closer examination reveals a heavy emphasis on error detection and less on error analysis and prevention. In other words, laboratory medicine has become competent at recognizing the symptoms of error but may not go far enough in determining its root cause. For example, the laboratory may detect a large 1:3s internal quality control failure. After a sequence of troubleshooting steps, which may include checking for pre- or post-analytical human error, testing fresh quality control material, recalibrating, and testing on an alternate instrument, the laboratory may have few additional steps it can undertake to determine the root cause (9). This limitation is in part due to a lack of access to raw instrument data, and of the knowledge and expertise needed to interpret them. It has increasingly become the norm for the laboratory to surrender analytical expertise to assay and instrument manufacturers in exchange for convenience. Yet the complexity of laboratory instruments continues to increase over time.

The relatively limited transfer of technical expertise to the laboratory users performing the tests is not commensurate with the high clinical risk of the tasks performed. Indeed, it is not uncommon today for ‘key operator’ training to be completed within a single day for an analyser, and within a week for more complex automation systems. The role of the laboratory user has been relegated to noting the alarm and informing the manufacturer’s technical support, who then determine a potential root cause and solution. Often, the laboratory has limited technical recourse when it does not agree with the manufacturer’s assessment. The asymmetry in technical expertise and data access also stifles the informed dialogue with the manufacturer that troubleshooting requires.

To further improve the reliability of the laboratory testing process, current quality practice in laboratory medicine needs to evolve. Root cause analysis should be performed to identify the cause of an instrument failure, rather than repeatedly dealing with its symptoms. Detecting and comparing the symptoms of an underlying instrument error, such as increased imprecision or systematic bias, is not as helpful as knowing its root cause (e.g., a leaking pipette valve).

The manufacturing industry has long adopted failure mode and effects analysis (FMEA) as a way to improve quality and reduce defects (10). Briefly, FMEA originated as an engineering technique in the aerospace and automobile industries to define, identify, and eliminate known and potential problems or errors in a system, design, process, and/or service before they reach the customer. In general, FMEA consists of three steps: (I) identify potential failures, including their causes and effects; (II) evaluate and prioritize the failure modes; and (III) propose actions to eliminate or reduce the chance of failure. Failure mode identification is typically performed by a team with a variety of relevant expertise, based on knowledge of the process and the data collected. The possible causes of a specific problem can be sorted, identified, and visualized with a cause-and-effect diagram (also known as an Ishikawa or “fishbone” diagram).

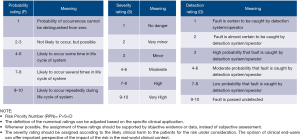

Subsequently, three aspects of each failure mode are rated on a scale of 1 to 10: (I) severity: the consequences if the failure happens, with 10 being most severe; (II) occurrence: the probability of the failure occurring, with 10 being very likely to happen; and (III) detection: the probability of the failure being detected before its effect is realized, with 10 being most unlikely to be detected by the existing design. The product of the three ratings is called the risk priority number (RPN; see Figure 1). Action plans targeting failure modes with a high RPN can be proposed and implemented to eliminate or reduce these failures, allowing a focused and conscious effort to improve system reliability continuously. FMEA has been practiced successfully in some fertility laboratories, where clinical risks are very high (11,12). An example of applying FMEA to the analytical phase of point-of-care glucometers, using data from a proficiency testing program (13), is shown in Table 1. Although FMEA has previously been advocated in laboratory medicine (14), its uptake remains limited and focused on the extra-analytical phases (15-17).
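The RPN arithmetic can be sketched in a few lines of Python. The example below encodes the three failure modes and ratings from Table 1 (where the probability column corresponds to occurrence) and ranks them by RPN. The `FailureMode` class is a hypothetical structure for illustration, not part of any FMEA standard or software tool.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    description: str
    occurrence: int  # 1-10, 10 = very likely to happen
    severity: int    # 1-10, 10 = most severe consequence
    detection: int   # 1-10, 10 = most unlikely to be detected

    def rpn(self) -> int:
        """Risk priority number: product of the three ratings."""
        for score in (self.occurrence, self.severity, self.detection):
            if not 1 <= score <= 10:
                raise ValueError("ratings must be between 1 and 10")
        return self.occurrence * self.severity * self.detection


# Ratings taken from Table 1 (point-of-care glucometer example)
modes = [
    FailureMode("Results reported for the wrong patient", 5, 8, 3),
    FailureMode("Instrument failure", 2, 8, 4),
    FailureMode("QC is not performed properly prior to measurement", 7, 2, 5),
]

# Highest RPN first: these failure modes are targeted for action first
for mode in sorted(modes, key=FailureMode.rpn, reverse=True):
    print(f"{mode.rpn():>4}  {mode.description}")
```

The ranking (120, 70, 64) places the patient mix-up first. Because the RPN is a simple product, different combinations of ratings can yield the same value, so a team may still wish to review high-severity failure modes separately rather than relying on the RPN alone.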

Table 1

| Instrument | Function | Potential failure mode | Potential cause | Failure effect | Existing controls, countermeasures, detection methods | Probability (occurrence) | Severity | Detection | Risk priority number (RPN) | Recommended action, person responsible, target date |
|---|---|---|---|---|---|---|---|---|---|---|
| Glucometer | Measure glucose concentration of blood sample | Results reported for the wrong patient | Patient mix-up by operator | Results reported do not correspond to the actual glucose concentration of the patient | Use barcode scanner for patient identification | 5 | 8 | 3 | 120 | E.g., retraining of staff, change out of poor barcode printing equipment, check data transmission, implement electronic data transmission |
| | | Instrument failure | Unknown | Results produced are inaccurate; delay in test results | QC must be performed daily prior to use | 2 | 8 | 4 | 64 | E.g., send faulty instrument for servicing with vendor |
| | | QC is not performed properly prior to measurement | Negligence of operator; QC failure | Unnecessary (resource-intensive) investigation of QC failure; unnecessary delay in patient testing | Device is locked if QC is not performed prior to use | 7 | 2 | 5 | 70 | E.g., retraining of staff, check QC inventory management |

Furthermore, documenting the type and impact of different technical faults and comparing them among peer laboratories and manufacturers will facilitate the identification of problems that may be present across geographical regions and ease the troubleshooting process. It will also help pinpoint weak links in the total testing process that can be improved. Importantly, owing to potential matrix effects, not all technical faults manifest as poor precision or bias in proficiency testing schemes. Indeed, it is not uncommon for the root cause of unreliable laboratory processes to remain undiscovered for prolonged periods, exposing patients to clinical harm, as shown in the cases of the prostate-specific antigen and insulin-like growth factor 1 assays (1,18). As such, comparing technical faults will give laboratory users an additional dimension of reliability on which to base more informed decisions about the choice of analytical system. Ultimately, it is hoped that such measurement and comparison of technical faults will compel in vitro diagnostic companies to adopt the principles of quality by design, which aim to minimize the factors that may contribute to poor reliability of the overall system.

The recent Boeing 737 MAX tragedies hold important lessons for laboratory medicine. Post-crisis analyses have identified inadequate pilot training, poor communication among pilots, trainers, designers, and engineers, insufficient product testing prior to commercialization, and deregulation and reduced oversight as potential contributing factors (19,20). Notably, despite preceding errors, FMEA was not conducted on the Maneuvering Characteristics Augmentation System, which was one of the major causes of the crash (21). It is incumbent upon laboratory medicine to uphold its standards and avoid the missteps that resulted in national tragedies. This lesson should not be lost on laboratory staff, as the most commonly reported deficiency in American laboratory accreditation visits related to staff competence (22). Also, the introduction of new equipment into laboratories has been associated with improved performance in external quality assurance; this improvement was attributed to more consistent training of staff by professional trainers rather than to the new platform itself (23).

Conclusions

Looking forward, laboratory medicine should demand greater access to instrument data and more detailed guided troubleshooting steps for resolving technical faults. Correlating instrument data with observed measurement errors can enable laboratories to identify the root causes of errors and potentially to develop predictive maintenance programs for their equipment. External quality assurance schemes may also need to be redesigned to include objective documentation of technical faults. Such error data may come directly from the instruments or from a summary of the service report. The purpose of such documentation is to enable industry benchmarking and improvement.

Acknowledgments

Funding: None.

Footnote

Provenance and Peer Review: This article was commissioned by the Guest Editor (Mark A. Cervinski) for the series “Patient Based Quality Control” published in Journal of Laboratory and Precision Medicine. The article has undergone external peer review.

Conflicts of Interest: The authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/jlpm-20-26). The series “Patient Based Quality Control” was commissioned by the editorial office without any funding or sponsorship. TPL serves as an unpaid editorial board member of The Journal of Laboratory and Precision Medicine from Feb 2019 to Jan 2021. The authors have no other conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of this work in ensuring that questions related to the accuracy or integrity of any part of this work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. Liu J, Tan CH, Badrick T, et al. Moving sum of number of positive patient result as a quality control tool. Clin Chem Lab Med 2017;55:1709-14.

2. Levey S, Jennings ER. The use of control charts in the clinical laboratory. Am J Clin Pathol 1950;20:1059-66.

3. Westgard JO, Groth T, Aronsson T, et al. Performance characteristics of rules for internal quality control: probabilities for false rejection and error detection. Clin Chem 1977;23:1857-67.

4. Badrick T, Cervinski M, Loh TP. A primer on patient-based quality control techniques. Clin Biochem 2019;64:1-5.

5. Loh TP, Cervinski MA, Katayev A, et al. Recommendations for laboratory informatics specifications needed for the application of patient-based real time quality control. Clin Chim Acta 2019;495:625-9.

6. Parvin CA, Baumann NA. Assessing Quality Control Strategies for HbA1c Measurements From a Patient Risk Perspective. J Diabetes Sci Technol 2018;12:786-91.

7. Plebani M, Sciacovelli L, Aita A. Quality Indicators for the Total Testing Process. Clin Lab Med 2017;37:187-205.

8. Badrick T, Gay S, Mackay M, et al. The key incident monitoring and management system - history and role in quality improvement. Clin Chem Lab Med 2018;56:264-72.

9. Kinns H, Pitkin S, Housley D, et al. Internal quality control: best practice. J Clin Pathol 2013;66:1027-32.

10. Ben-Daya M. Failure Mode and Effect Analysis. Handbook of Maintenance Management and Engineering. Springer London, 2009:75-90.

11. Intra G, Alteri A, Corti L, et al. Application of failure mode and effect analysis in an assisted reproduction technology laboratory. Reprod Biomed Online 2016;33:132-9.

12. Rienzi L, Bariani F, Dalla Zorza M, et al. Comprehensive protocol of traceability during IVF: the result of a multicentre failure mode and effect analysis. Hum Reprod 2017;32:1612-20.

13. Aslan B, Stemp J, Yip P, et al. Method precision and frequent causes of errors observed in point-of-care glucose testing: a proficiency testing program perspective. Am J Clin Pathol 2014;142:857-63.

14. Chiozza ML, Ponzetti C. FMEA: a model for reducing medical errors. Clin Chim Acta 2009;404:75-8.

15. Capunzo M, Cavallo P, Boccia G, et al. A FMEA clinical laboratory case study: how to make problems and improvements measurable. Clin Leadersh Manag Rev 2004;18:37-41.

16. Magnezi R, Hemi A, Hemi R. Using the failure mode and effects analysis model to improve parathyroid hormone and adrenocorticotropic hormone testing. Risk Manag Healthc Policy 2016;9:271-4.

17. Romero A, Gómez-Salgado J, Romero-Arana A, et al. Utilization of a healthcare failure mode and effects analysis to identify error sources in the preanalytical phase in two tertiary hospital laboratories. Biochem Med (Zagreb) 2018;28:020713.

18. Algeciras-Schimnich A, Bruns DE, Boyd JC, et al. Failure of current laboratory protocols to detect lot-to-lot reagent differences: findings and possible solutions. Clin Chem 2013;59:1187-94.

19. Robison P, Johnsson J. Boeing’s Push to Make Training Profitable May Have Left 737 Max Pilots Unprepared. Published December 20, 2019.

20. Kitroeff N, Gelles D. Before Deadly Crashes, Boeing Pushed for Law That Undercut Oversight. New York Times. Published October 27, 2019.

21. Komite Nasional Keselamatan Transportasi Republic of Indonesia. Final Report No. KNKT.18.10.35.04PT. Lion Mentari Airlines Boeing 737-8 (MAX); PK-LQP Tanjung Karawang, West Java, 29 October 2018.

22. Chittiprol S, Bornhorst J, Kiechle FL. Top Laboratory Deficiencies Across Accreditation Agencies. Problems with documenting personnel competency top the list. Clin Lab News 2018;44.

23. Punyalack W, Graham P, Badrick T. Finding best practice in internal quality control procedures using external quality assurance performance. Clin Chem Lab Med 2018;56:e226-e228.

Cite this article as: Lim CY, Loh TP, Badrick T. Asking why: moving beyond error detection to failure mode and effects analysis. J Lab Precis Med 2020;5:29.